Comment on Cloak Affiliate Links Tutorial by SEO Dave.

For me, that includes all affiliate links.

It does not include any links to my own sites (unless there’s a reason I don’t want two of my sites linked together).

Nor does it include links in general.

I wouldn’t worry about Googlebot leaving your site. Even if your site had no links off the domain, once it has spidered enough pages it will leave anyway, and it will be back at regular intervals: the more incoming links you have from other indexed pages, the more often you’ll be spidered.

How many pages a bot will spider at a particular time seems random, and so do the links it follows. It won’t hit your home page and follow every link on it until it runs out. Bots act like a random link clicker: they hit page A and click a link at random, and over time all links get clicked.
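A toy simulation of the "random link clicker" idea, as a sketch only. The assumptions are mine, not from the comment. The bot follows exactly one uniformly random link from each page it lands on. A page with no outgoing links sends it back to the start page. The site graph and page names are made up. Real crawlers schedule URLs in far more complex ways. The only point here is that random clicking eventually reaches every linked page, in no fixed order.

```python
import random
from collections import Counter


def random_clicker(links: dict[str, list[str]], start: str, clicks: int, seed: int = 0) -> Counter:
    """Toy model of a bot as a random link clicker (simplifying assumptions, see lead-in).

    On each step the current page is counted as visited, then one of its outgoing
    links is chosen uniformly at random. A page with no outgoing links sends the
    bot back to `start` (an assumption made only so the walk never gets stuck).
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    visits: Counter = Counter()
    page = start
    for _ in range(clicks):
        visits[page] += 1
        outgoing = links.get(page, [])
        page = rng.choice(outgoing) if outgoing else start
    return visits


# Hypothetical five-page site: page -> pages it links to.
site = {
    "home": ["about", "review-a", "review-b"],
    "about": ["home"],
    "review-a": ["home", "review-b", "review-c"],
    "review-b": ["home"],
    "review-c": ["review-a"],
}
visits = random_clicker(site, "home", clicks=1000, seed=42)
print(visits)  # visit counts per page; which links get clicked, and when, is random
```

Changing the seed changes the order and counts, but with enough clicks every linked page gets visited.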

This is where the SEO myth that Google won’t follow more than 100 links from a page comes from. The reality is that Google advises against having more than 100 links on a page because each link (on a page with 100 links) has a 1 in 100 chance of being followed every time a spider hits that page.

If you have a page with 1,000 links, it’s going to take a LONG time for all 1,000 of them to be followed at random. If that page links to an important page you really want indexed, and that important page has links from no or few other pages, it might never be indexed.
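To put rough numbers on the "1 in 100" reasoning, here is a worked sketch under a simplifying assumption taken from the comment: each spider visit to a page follows exactly one of its n links, chosen uniformly at random. The chance that a given link has been followed at least once after k visits is 1 - (1 - 1/n)^k. The expected number of visits before every link has been followed at least once is the coupon-collector value n × (1 + 1/2 + … + 1/n). Real crawlers don't behave this simply, so treat the figures as illustration only.

```python
def chance_link_followed(n_links: int, visits: int) -> float:
    """P(a given link is followed at least once) after `visits` spider hits,
    assuming each hit follows exactly one of n_links links, uniformly at random."""
    return 1.0 - (1.0 - 1.0 / n_links) ** visits


def expected_visits_to_follow_all(n_links: int) -> float:
    """Coupon-collector expectation under the same assumption: n * (1 + 1/2 + ... + 1/n)."""
    return n_links * sum(1.0 / i for i in range(1, n_links + 1))


for n in (100, 1000):
    print(
        f"{n} links: P(a given link followed in 100 visits) = {chance_link_followed(n, 100):.3f}, "
        f"expected visits to follow every link = {expected_visits_to_follow_all(n):.0f}"
    )
```

Under these assumptions, 100 visits give a particular link about a 63% chance of being followed on a 100-link page but only about a 10% chance on a 1,000-link page. Following every link takes roughly 519 visits on average for 100 links and roughly 7,485 for 1,000.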

The best advice on the number of links per page is that it depends on the site and on how many other sites link to it (and how popular the pages those incoming links come from are). The more incoming links you have, the more often a bot will find your site and the more links it will follow within it.

You can submit an XML sitemap to Google, but I don’t see the point. If Google can’t find the pages of your site naturally via incoming links, it isn’t going to rank them well just because you’ve added an XML sitemap of everything. I personally don’t want Google to find a page via an XML sitemap. If I think I’ve done everything right and a page isn’t indexed through random spidering, I want to know about it, and having it indexed via an XML sitemap means I might not realise there’s a problem. If an important page isn’t being spidered regularly, it’s easily solved (once I know there’s an issue): add more links directly to that page. Remember, getting a page indexed is a waste of time if it doesn’t also generate traffic.
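The comment's approach is to find pages that spidering would struggle to reach, then add links to them. A minimal sketch of that check on your own internal link graph follows. It finds pages that can't be reached from the home page by following links, and it counts how many other internal pages link to each page. It assumes you already have the graph as a dict mapping each page to the pages it links to. How you collect that graph (for example from your own crawl) is outside this sketch. It also only sees internal links, not the external incoming links the comment stresses. The page names are hypothetical.

```python
from collections import deque


def crawl_report(links: dict[str, list[str]], home: str) -> tuple[set[str], dict[str, int]]:
    """Return (pages not reachable from `home` via links, internal incoming-link count per page)."""
    # Count how many distinct other pages link to each page.
    incoming = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):  # a page linking twice still counts once
            if target != source:
                incoming[target] = incoming.get(target, 0) + 1

    # Breadth-first search from the home page: everything a link-follower could reach.
    reachable = {home}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)

    all_pages = set(links) | set(incoming)
    return all_pages - reachable, incoming


site = {
    "home": ["reviews", "about"],
    "reviews": ["home", "widget-review"],
    "widget-review": ["reviews"],
    "about": ["home"],
    "buying-guide": ["home"],  # important page, but nothing links to it
}
unreachable, incoming = crawl_report(site, "home")
print(unreachable)               # {'buying-guide'}
print(incoming["buying-guide"])  # 0
```

A page that shows up as unreachable, or with very few incoming links, is the kind of page the comment suggests fixing by adding more links to it directly, rather than by listing it in a sitemap.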

Well, that comment went all over the place :-)

David

More Comments by SEO Dave

WordPress Cloak Affiliate Links

WordPress Affiliate Link Cloaking

I think you’ve misunderstood the Stallion link cloaking feature, which is turned on under “SEO Advanced Options” : “Cloak Affiliate Links ON”.

If you have a WordPress site that links to affiliate products, you don’t really want to waste valuable link …

Affiliate Link Cloaking

I checked your website, and the cloak script appears to be working as expected on the affiliate links with rel="nofollow" attributes added by WPRobot.

The links that lack nofollow attributes won’t be converted to cloaked links. This will happen if a WPRobot …

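The reply says the cloak script only converts affiliate links that carry rel="nofollow", so links without it stay as ordinary links. Here is a minimal sketch of that selection rule. It is written in Python for illustration, while the actual Stallion script is javascript and isn't shown here. I'm assuming the rule is "the rel attribute contains the nofollow token", and the sample URLs are made up.

```python
from html.parser import HTMLParser


class NofollowSplitter(HTMLParser):
    """Collects <a href> targets, split by whether rel contains the "nofollow" token."""

    def __init__(self) -> None:
        super().__init__()
        self.nofollow: list[str] = []  # links the cloak rule would pick up (assumed rule)
        self.followed: list[str] = []  # links left as ordinary links

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, e.g. "nofollow noopener".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


def split_links(html: str) -> tuple[list[str], list[str]]:
    parser = NofollowSplitter()
    parser.feed(html)
    parser.close()
    return parser.nofollow, parser.followed


sample = """
<p><a href="https://example.com/aff/item-1" rel="nofollow">Buy it</a></p>
<p><a href="https://example.com/aff/item-2">Also buy it</a></p>
"""
cloaked, plain = split_links(sample)
print(cloaked)  # ['https://example.com/aff/item-1']
print(plain)    # ['https://example.com/aff/item-2']
```

Running something like this over a page's source is a quick way to spot affiliate links that are missing rel="nofollow" and so would not be cloaked.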

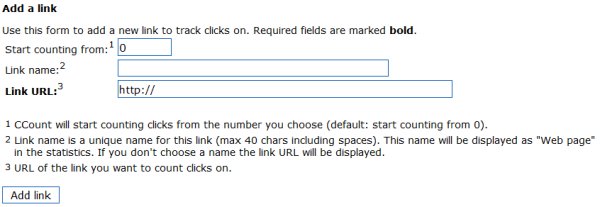

Tracking Links WordPress Plugin

The script I mention above counts the number of clicks, but it wouldn’t provide that information to your customer or track conversions.

Beyond the above, Stallion doesn’t track clicks etc., so I’d look for a WordPress link tracking plugin. I doubt you’ll …

Cloak Affiliate Links Guide

I can confirm the Stallion cloaked links are set up correctly. They are meant to look and act like normal text links until you view the source.

The way to check is to view the source. I tend to use Firefox for browsing and …

Cloaking Affiliate Links Tutorial

It sounds like you’ve made a mistake in the code, but I’ve no idea what without seeing the code used in the test.js file and a link.

URL to the site this is on?

David …

WordPress Blogs and the robots.txt file

The robots.txt file is about blocking or allowing bot access to specific parts of a site. It’s not the same as cloaking links.

Generally speaking, most entries within a robots.txt file are a waste of time. The default action is allow …

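The "default action is allow" point can be checked with Python's standard-library robots.txt parser, urllib.robotparser. An empty robots.txt blocks nothing, and a Disallow rule only affects the paths it names. The /wp-admin/ rule and the example.com URLs below are purely illustrative. They are not a recommendation for what a WordPress robots.txt should contain.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text supplied as a string (no network access needed)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# An empty robots.txt: nothing is disallowed, so every URL is allowed.
empty = build_parser("")

# One illustrative rule for all bots; only paths starting with /wp-admin/ are blocked.
typical = build_parser("User-agent: *\nDisallow: /wp-admin/\n")

print(empty.can_fetch("Googlebot", "https://example.com/any-post/"))          # True
print(typical.can_fetch("Googlebot", "https://example.com/any-post/"))        # True
print(typical.can_fetch("Googlebot", "https://example.com/wp-admin/edit.php"))  # False
```

Note that none of this hides a link: robots.txt only tells well-behaved bots which URLs not to fetch.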

Breaking the Link Cloaking Script

After posting the comment above, I had a thought.

If you aren’t using the Stallion link cloaking script for anything else, you could break it so the Stallion affiliate link code isn’t converted to a clickable link by the javascript.

The result would …

Automated Link Cloaking Script

Currently the Stallion theme has an extra options page (under the Massive Passive Profits menu : SEO) that cloaks all links the Massive Passive Profits plugin creates.

What this does is stop link benefit from being wasted through affiliate links etc. …

Clickbank Ads Use Javascript Links

Like AdSense and Chitika ads, Clickbank uses javascript to serve its ads, so they are already ‘hidden’ from search engines.

If an ad’s built using javascript so that there isn’t a URL shown in the code (view …

SEO Cloaking Affiliate Links

If you ask a question in a comment which I respond to and you don’t get it, but weeks later ask the same question on another page (or another site of mine), do you expect a different answer?

I’m not counting …

Cloaked Affiliate Links Tutorial

It’s normal to see the URL when you hover over the Stallion Cloaked links.

If you’ve used the span type code described in the Stallion Theme Cloak Affiliate Links Tutorial above and it looks like the code above (when you edit …

Hide URL of Link in Status Bar

You should see whatever is in the id="" part of the link code, so if it’s a redirected URL you’ll see the redirect URL in the status bar. That means it’s working.

There is a way to hide a link destination in the …

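Why hovering shows the URL: with span-based cloaking, the page source holds a span whose id="" carries the destination. Browser-side javascript then builds a real link from it, so the status bar shows whatever was in id. Below is a rough Python sketch of that conversion, for illustration only. The markup (`<span class="cloaked" id="URL">`) and the class name are hypothetical stand-ins, not the actual Stallion code. The real conversion happens in javascript in the browser and may differ. This simple parser also assumes the cloaked span contains no nested spans.

```python
import html
from html.parser import HTMLParser
from typing import Optional


class CloakedSpanReader(HTMLParser):
    """Finds hypothetical <span class="cloaked" id="URL">text</span> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []  # (destination from id, visible text)
        self._current_url: Optional[str] = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        classes = (attributes.get("class") or "").split()
        if tag == "span" and "cloaked" in classes and attributes.get("id"):
            self._current_url = attributes["id"]
            self._text = []

    def handle_data(self, data):
        if self._current_url is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "span" and self._current_url is not None:
            self.links.append((self._current_url, "".join(self._text)))
            self._current_url = None


def to_anchor(url: str, text: str) -> str:
    """Build the clickable link a browser-side script would create: the id value becomes the href."""
    return f'<a href="{html.escape(url)}">{html.escape(text)}</a>'


source = '<p>Read the <span class="cloaked" id="https://example.com/go/widget">widget review</span> first.</p>'
reader = CloakedSpanReader()
reader.feed(source)
reader.close()
print("<a " in source)          # False: the raw source has no <a href> link
print(to_anchor(*reader.links[0]))  # <a href="https://example.com/go/widget">widget review</a>
```

The raw source a bot reads contains no link element, while the link built afterwards points at the id value. That is why seeing the (redirect) URL on hover is expected.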