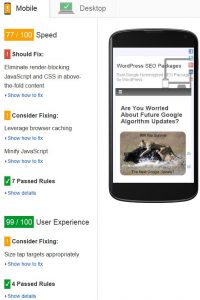

Since the release of the free Google PageSpeed Insights Tool I’ve spent a LOT of time trying to get this website’s scores as close to 100/100 as possible using the WordPress SEO Package I develop, and I’m currently (September 2014) stuck at:

User Experience 99/100

Desktop 91/100

See the Live PageSpeed Insights Results.

These are very good PageSpeed Insights results and they will be hard to improve, not because better results are impossible, but because I can’t get them without turning important website features off.

I could get to something like these results:

Mobile 96/100

User Experience 100/100

Desktop 98/100

All it would take is turning off a few website features (about two minutes’ work), including the Facebook and Google+ social media buttons, the Google Analytics tracking code and the up/down scroll arrows (bottom right corner of the screen). This would leave just one render-blocking CSS file issue, which in practice can’t be fixed: there is a fix (use a LOT of inline CSS), but the fix is worse than the issue it fixes. I can live with one render-blocking CSS file considering many websites in this niche (my competition) have over a dozen render-blocking CSS/JS files!

Also see the PageSpeed Insights Size Tap Targets Appropriately (part of the User Experience metrics).

How to Improve PageSpeed Insights Results?

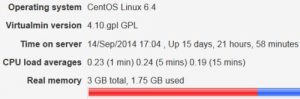

To achieve these results, this website runs on an Apache web server on CentOS 6.4 with 3GB of RAM. There’s nothing special about the server (it’s not even a dedicated server): it’s a low-cost VPS from GoDaddy which hosts around 50 of my domains and costs $71.99 a month including tax. I’ve run my websites on $300-a-month dedicated servers and have in practice found the GoDaddy VPS to be better for ME!

It is important to use a decent host/server, since an oversold hosting package will hurt page speed. You get what you pay for: there’s only so much you can do if your host is underpowered and/or oversold. What I use is midrange, neither cheap nor expensive; it works, and it’s a good test bed for improving PageSpeed Insights results without spending a fortune.

This website runs:

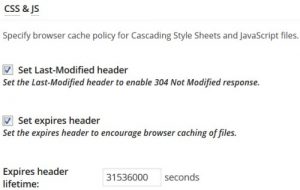

Apache web server with the following Apache modules active and configured correctly: mod_deflate.so (compresses files such as JS and CSS files) and mod_expires.so (sets cache headers so files can be cached locally by a browser). Most hosts will have these modules active, but some settings might need modifying for maximum performance. For example, I had to add a rule to mod_expires.so for JavaScript files because the setup lacked full support for them: there was support for application/javascript and application/x-javascript, but not for text/javascript.
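To show why a missing MIME type matters, here is a minimal Python sketch of a simplified model of how mod_expires chooses an expiry by MIME type. It is not Apache’s code: the rule table and the `expires_for` helper are hypothetical. In this model, a response whose Content-Type has no matching rule (and no default) simply gets no cache header, which is exactly the text/javascript gap described above.

```python
from typing import Optional

# Hypothetical rule table, loosely modelled on Apache "ExpiresByType" lines.
# Values are cache lifetimes in seconds.
ONE_YEAR = 365 * 24 * 60 * 60

rules = {
    "application/javascript": ONE_YEAR,
    "application/x-javascript": ONE_YEAR,
    "text/css": ONE_YEAR,
}


def expires_for(content_type: str, rules: dict, default: Optional[int] = None) -> Optional[int]:
    """Return the cache lifetime in seconds for a Content-Type, or None if no header would be set.

    Parameters such as '; charset=utf-8' are ignored and the match is case-insensitive.
    """
    mime = content_type.split(";", 1)[0].strip().lower()
    return rules.get(mime, default)


print(expires_for("text/javascript; charset=utf-8", rules))  # None: no rule, so no cache header

# The fix described above: add the missing rule for text/javascript.
rules["text/javascript"] = ONE_YEAR
print(expires_for("text/javascript; charset=utf-8", rules))  # 31536000
```

On the real server the equivalent fix is one more `ExpiresByType` line for text/javascript in the mod_expires configuration.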

There’s a good overview video from Google PageSpeed Insights.

It goes a long way toward showing what to look at when tracking down PageSpeed issues and, though it doesn’t go into detail on how to fix them, it does point in the right direction. It’s well worth watching a few times to get a feel for what you are trying to achieve.

Note from the video that there are aspects of page speed no WordPress SEO package, SEO theme or SEO plugin can help you with: they have no access to your web server and, for example, can’t turn the mod_deflate.so Apache module on if your host doesn’t use it.

WordPress Performance SEO Setup

WordPress 4.0 : ALWAYS update when available.

The Stallion Responsive WordPress SEO Package : Currently v8.1 (September 2014).

W3 Total Cache WordPress Plugin : Very important performance SEO plugin; it caches and minifies a lot of what is served to visitors (cached and minified means it runs faster). If this plugin didn’t exist I would have to build the same features into the WordPress SEO Package I develop.

EWWW Image Optimizer WordPress Plugin : Important plugin to optimize images; basically it makes them smaller in file size (not smaller in width/height). There are plenty of WordPress plugins to optimize images, or you could optimize images on your computer before adding them to WordPress. What matters is that all images are optimized.

I also use a couple of other plugins all the time, like “Subscribe to Comments Reloaded” and an ecommerce plugin for selling Stallion Responsive, but these have no major impact on performance/page speed.

Once or twice a month I also run the “WP Optimize” plugin (optimizes the WordPress database) and the “Broken Link Checker” plugin (checks for broken links). The WP Optimize plugin does affect performance: a clean, optimized database runs faster. Broken links per se have no performance impact, but fixing them matters SEO-wise because it shows you maintain your site. Lots of broken links suggest the site isn’t maintained, which is probably one of the 200 ranking factors Google uses.

Performance vs Website Features

You have to be careful which plugins and features you add to a WordPress site. So many WordPress themes and plugins are poorly built, with no thought given to performance SEO: they generate dozens of “Eliminate render-blocking JavaScript and CSS in above-the-fold content” issues, or load non-cached Gravatars on comment pages, generating hundreds of “Leverage browser caching” issues.

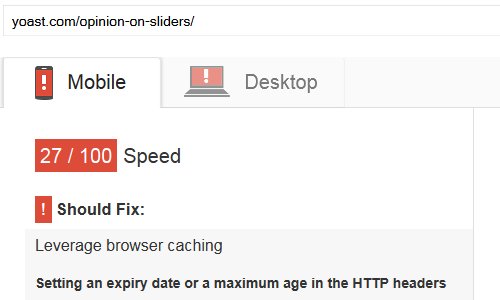

Look at the performance damage the Yoast WordPress SEO plugin causes (or, more precisely, fails to prevent) on Yoast’s own website:

Plugins like Yoast SEO should be avoided, see Is Yoast the Best WordPress SEO Plugin? for details.

It’s why I turn on the WP Optimize and Broken Link Checker plugins manually: I don’t need those features running 24/7, 365 days a year, only a couple of times a month.

If you don’t use or really need a feature, TURN IT OFF.

Even the WordPress SEO Package I’ve developed with performance SEO in mind will add avoidable PageSpeed Insights issues if some optional website features are active. For example Stallion Responsive includes the option to add up to 7 social media buttons:

Facebook Like Button

Twitter Tweet Button

Google +1 Button

Stumbleupon Button

Reddit Button

Linkedin Button

Pinterest Button

Almost all of these generate PageSpeed Insights issues related to “Leverage browser caching” and “Minify JavaScript”. The scripts (JavaScript and CSS files) that create the buttons are hosted offsite (not on our websites), so we have zero control over how they are cached: we can’t change how long Google caches its Google+ button (currently 30 minutes).

The question for the social media like buttons above is: Are the PageSpeed Insights Issues worth the website feature?

I use Facebook, Google+ and Twitter like buttons on this website and take the small performance hit. If I had an image-intensive site whose images would be shared on Pinterest, I’d add the Pinterest button as well, but the images I add to articles here aren’t the type to be shared very often, so it’s not worth the performance hit. Basically I’ve gone with the big three: Facebook, Google+ and Twitter.

These result in the following issues, which I cannot remove:

Leverage browser caching

Setting an expiry date or a maximum age in the HTTP headers for static resources instructs the browser to load previously downloaded resources from local disk rather than over the network.

Leverage browser caching for the following cacheable resources:

* https://apis.google.com/js/api.js (30 minutes)

* https://apis.google.com/js/plusone.js (30 minutes)

* https://oauth.googleusercontent.com/…e:rpc:shindig.random:shindig.sha1.js?c=2 (60 minutes)

* http://www.google-analytics.com/analytics.js (2 hours)

Note: the analytics.js script belongs to Google Analytics, not to the Google+ button. These resources are flagged because their cache lifetime is short: the longer the cache lifetime (a year for some resources), the less often your visitors have to download that resource from the original server.
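A minimal Python sketch of the kind of check behind this warning, applied to the four resources listed above. The one-week threshold is my assumption for illustration, not a documented PageSpeed value, and `flag_short_caches` is a hypothetical helper. The Facebook file is included for comparison because it is cached for roughly a year.

```python
from datetime import timedelta

# Cache lifetimes from the PageSpeed Insights report above, plus the Facebook
# file, which is cached for roughly a year.
lifetimes = {
    "api.js": timedelta(minutes=30),
    "plusone.js": timedelta(minutes=30),
    "shindig.sha1.js": timedelta(minutes=60),
    "analytics.js": timedelta(hours=2),
    "Facebook xQ4dJpNtukO.js": timedelta(days=358),
}

# Assumed threshold, for illustration only: anything cached for less is flagged.
THRESHOLD = timedelta(weeks=1)


def flag_short_caches(lifetimes: dict, threshold: timedelta = THRESHOLD) -> list:
    """Return the resources whose cache lifetime is below the threshold, shortest first."""
    short = [name for name, ttl in lifetimes.items() if ttl < threshold]
    return sorted(short, key=lambda name: lifetimes[name])


print(flag_short_caches(lifetimes))
# ['api.js', 'plusone.js', 'shindig.sha1.js', 'analytics.js']
```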

Minify JavaScript

Compacting JavaScript code can save many bytes of data and speed up downloading, parsing, and execution time.

Minify JavaScript for the following resources to reduce their size by 1.7KiB (2% reduction).

Minifying https://fbstatic-a.akamaihd.net/rsrc.php/v2/y0/r/xQ4dJpNtukO.js could save 1.1KiB (2% reduction) after compression.

Minifying https://oauth.googleusercontent.com/…e:rpc:shindig.random:shindig.sha1.js?c=2 could save 661B (3% reduction) after compression.

If I turned off the Google+ button, 4 of the 6 issues above would be fixed.

Turn off the Facebook Like button as well and another issue is fixed.

Remove the Google Analytics tracking code too and all 6 issues are resolved.
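The arithmetic above can be checked with a small Python sketch. The mapping of each of the six issues to the feature that causes it is taken from the two lists of issues above; the feature names (`google_plus`, `facebook_like`, `google_analytics`) and the `remaining_issues` helper are hypothetical labels for illustration.

```python
# Which website feature causes each of the six PageSpeed issues listed above.
ISSUES = {
    "Leverage browser caching: api.js": "google_plus",
    "Leverage browser caching: plusone.js": "google_plus",
    "Leverage browser caching: shindig.sha1.js": "google_plus",
    "Leverage browser caching: analytics.js": "google_analytics",
    "Minify JavaScript: Facebook xQ4dJpNtukO.js": "facebook_like",
    "Minify JavaScript: shindig.sha1.js": "google_plus",
}


def remaining_issues(disabled: set) -> list:
    """Return the issues still reported once the given features are turned off."""
    return [issue for issue, feature in ISSUES.items() if feature not in disabled]


steps = [
    {"google_plus"},
    {"google_plus", "facebook_like"},
    {"google_plus", "facebook_like", "google_analytics"},
]
for disabled in steps:
    print(sorted(disabled), "->", len(remaining_issues(disabled)), "issues left")
# 2, then 1, then 0 issues left
```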

When NOT to Fix PageSpeed Insights Issues

Although these are PageSpeed issues, they aren’t actually important: the impact is negligible. Take the “Leverage browser caching” issue related to http://www.google-analytics.com/analytics.js (2 hours).

It’s flagged because a visitor to your site will cache the analytics.js file for only 2 hours. But that still means that if they stay on your website for 1 hr 59 minutes browsing 100+ webpages, they won’t have to download the analytics.js file on every page load (it will be served from their local browser cache). So for most visitors, even those who view dozens of webpages, the file is downloaded only once.
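To make the reasoning concrete, here is a minimal Python sketch that counts how often one shared script is downloaded during a visit. It assumes a simplified cache model (the browser starts with an empty cache, a copy stays fresh for the whole cache lifetime after download, and conditional revalidation is ignored); `fetch_count` is a hypothetical helper.

```python
def fetch_count(view_minutes, ttl_minutes):
    """Count network downloads of one script over a visit.

    view_minutes: times (minutes from the start of the visit) at which pages are viewed.
    ttl_minutes: how long the browser may reuse its cached copy.
    Simplified model: empty cache at the start, no revalidation requests.
    """
    fetches = 0
    cached_at = None
    for t in sorted(view_minutes):
        # Download when there is no copy yet or the cached copy has expired.
        if cached_at is None or t - cached_at >= ttl_minutes:
            fetches += 1
            cached_at = t
    return fetches


# One page view per minute for 1 hr 59 minutes (119 page views).
visit = range(119)
print(fetch_count(visit, 120))  # analytics.js, cached for 2 hours: 1 download
print(fetch_count(visit, 30))   # a 30-minute cache, like the apis.google.com files: 4 downloads
```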

It gets better. Mine isn’t the only website running Google Analytics, so there’s a very good chance that before visiting my website you’ve been to another website in the past 2 hours that also runs Google Analytics. You would then already have the analytics.js file cached locally and wouldn’t have to redownload it while visiting my website. Obviously after 2 hours it needs re-caching, but the odds are that most of the time it won’t need re-downloading during any one visit to my website. In effect, this and similar PageSpeed issues with popular resources like the analytics.js file can be ignored. The same argument applies to the https://apis.google.com/js/ files, though those are cached for only 30 minutes (no idea why so short).

Let’s take a look at the “Minify JavaScript” issues; note the possible savings:

Minify JavaScript for the following resources to reduce their size by 1.7KiB (2% reduction).

If Facebook and Google fixed the issue by fully minifying those JS files, visitors would be spared downloading an extra 1.7 KiB. In case you aren’t familiar with file sizes: saving 1.7 KiB will have no noticeable impact on performance.

Fully minifying a JS or CSS file basically means removing all the tabs, extra spaces and carriage returns. Load the Facebook JS file below in a browser and you’ll see its contents:

File no longer exists

Note how there are blank lines. To fully minify this file, Facebook would remove all the blank lines and carriage returns, which would make it 1.1 KiB smaller (just 2% smaller). To put this into perspective, the file as it is is 210.96 KiB uncompressed and significantly smaller compressed (they will be using something like mod_deflate.so or gzip to compress the file to about 110 KiB). You can see this is a tiny issue: you are downloading the equivalent of a small image icon’s worth of extra data.
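As an illustration of the whitespace removal described above, here is a minimal Python sketch that drops blank lines and indentation and measures the bytes saved. It is not a safe JavaScript minifier and not what Facebook uses: it can alter multi-line string literals, and it deliberately keeps one newline per line because removing every line break can change a script’s meaning through automatic semicolon insertion. Real minifiers also strip comments and shorten names. Note that PageSpeed reports savings after compression, while this sketch measures raw bytes; `strip_whitespace_lines` and `savings` are hypothetical helpers.

```python
def strip_whitespace_lines(source: str) -> str:
    """Drop blank lines and leading/trailing whitespace on each line (illustration only)."""
    lines = (line.strip() for line in source.splitlines())
    return "\n".join(line for line in lines if line)


def savings(before: str, after: str) -> tuple:
    """Return (bytes saved, percentage saved) measured on UTF-8 encoded text."""
    b = len(before.encode("utf-8"))
    a = len(after.encode("utf-8"))
    return b - a, 100.0 * (b - a) / b


sample = "function add(a, b) {\n\n    return a + b;\n}\n\n"
small = strip_whitespace_lines(sample)
print(repr(small))  # 'function add(a, b) {\nreturn a + b;\n}'
print(savings(sample, small))
```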

Ideally Facebook, Google+ etc. would fully minify their resources, but the amount saved is small. Also note the file isn’t listed under “Leverage browser caching”, meaning its cache header is set far in the future (roughly a year from now; relevant headers below).

* Date = Sun, 14 Sep 2014 13:07:34 GMT

* Expires = Mon, 07 Sep 2015 15:09:28 GMT

* Last-Modified = Mon, 01 Jan 2001 08:00:00 GMT
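The “roughly a year” can be worked out from these headers. A minimal Python sketch, assuming the usual HTTP freshness rule (a Cache-Control max-age directive takes precedence; otherwise the lifetime is Expires minus Date). When neither is present, browsers may apply a heuristic based on Last-Modified; this sketch returns zero instead. `freshness_lifetime` is a hypothetical helper.

```python
from datetime import timedelta
from email.utils import parsedate_to_datetime


def freshness_lifetime(headers: dict) -> timedelta:
    """Cache lifetime a browser may use: max-age wins, otherwise Expires minus Date."""
    for directive in headers.get("Cache-Control", "").split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return timedelta(seconds=int(value))
    if "Expires" in headers and "Date" in headers:
        return parsedate_to_datetime(headers["Expires"]) - parsedate_to_datetime(headers["Date"])
    return timedelta(0)  # no explicit freshness information (heuristics not modelled)


facebook_headers = {
    "Date": "Sun, 14 Sep 2014 13:07:34 GMT",
    "Expires": "Mon, 07 Sep 2015 15:09:28 GMT",
    "Last-Modified": "Mon, 01 Jan 2001 08:00:00 GMT",
}
print(freshness_lifetime(facebook_headers))  # 358 days, 2:01:54
```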

This means that although our visitors have to download an extra 1.1 KiB of data because of Facebook, a visitor might actually redownload that file only once a year.

PageSpeed Insights and Google Rankings

When looking through your PageSpeed Insights results, you have to move past just trying to get to 100/100 and consider what the impact of each issue will be. I cannot believe Google takes raw numbers like 91/100 and uses them as a ranking factor per se.

The Google PageSpeed Insights results are a blunt tool for showing webmasters where important issues COULD be. Even if Google and Facebook modified the resources discussed above so they are cached further into the future and fully minified, our visitors would still have to download those resources (the Facebook JS file is over 100 KiB compressed, which isn’t small), and Google will take the resource impact into account.

We could create 100 JavaScript and CSS files, each 1MB in size (that’s big), give them cache headers a year from now, load them asynchronously (which removes the render-blocking warnings), and those 100 1MB files wouldn’t generate any Google PageSpeed Insights issues. What sort of impact do you think loading 100 1MB files would have on your website’s performance, especially on a slow 3G mobile network!!!
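A back-of-the-envelope Python sketch of that question. The 1.6 Mbit/s figure is an assumed 3G throughput chosen for illustration only (real networks vary widely), 1MB is taken as a decimal megabyte, and `download_seconds` is a hypothetical helper that ignores latency, HTTP overhead and TCP slow start, so the real cost would be higher.

```python
def download_seconds(total_bytes: int, bits_per_second: float) -> float:
    """Lower bound on transfer time: payload only, no latency or protocol overhead."""
    return total_bytes * 8 / bits_per_second


ONE_MB = 1_000_000       # decimal megabyte
payload = 100 * ONE_MB   # 100 files of 1MB each

# Assumed 3G throughput, for illustration only.
ASSUMED_3G_BPS = 1_600_000  # 1.6 Mbit/s

print(download_seconds(payload, ASSUMED_3G_BPS))  # 500.0 seconds, over 8 minutes
```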

It seems Google is moving towards wanting webmasters to offer minimalistic websites: the less you force your users to download, the faster your webpages load, which is good for visitors (especially mobile device users).

Just look at the Google home page and its minimalistic design, yet it’s the most popular website on the Internet because it gives users what they want.

http://developers.google.com/speed/pagespeed/insights/?url=https%3A%2F%2Fwww.google.com&tab=mobile

David Law

W3 Total Cache plugin

And in W3 Total Cache I faced this error:

Recently an error occurred while creating the CSS / JS minify cache: A group configuration for “include” was not set.

Thanks